Welcome to my series on deploying a secure k3s instance! Throughout this series, I’ll guide you through deploying and maintaining a k3s cluster securely. From the initial setup to ongoing security measures, I’ll cover the essentials for building your own development environment and hosting your services securely.

In this series I will cover the following topics:

- Introduction to k3s: Explore what k3s is, its benefits, and why it’s a valuable tool for Kubernetes deployments.

- Installing k3s: Step-by-step guide to installing k3s, Helm, and Rancher.

- Kubernetes Hardening: Dive into the configuration steps after installation, such as securing the basic cluster setup and its network settings.

- Updates and Maintenance: Keep your k3s cluster up to date with security patches and perform regular maintenance tasks.

- Monitoring and Logging: Deploy and configure monitoring and logging services for your k3s cluster.

- Development Environment: Deploy your own development environment with your own code repository, CI/CD engine, and security tooling.

Each post delves into a specific aspect of deploying and securing a k3s instance, so that by the end of the series you will understand how to build a secure development environment with k3s.

Let’s start!

Introduction to K3s 📖

What is K3s?

k3s is a lightweight Kubernetes distribution designed for resource-constrained environments, such as edge computing, IoT devices, development environments, and small-scale deployments. Developed by Rancher Labs, k3s aims to simplify installing and running Kubernetes without sacrificing essential Kubernetes features.

Why K3s over K8s?

Compared with a standard upstream Kubernetes (k8s) deployment, k3s is often the better choice for small setups like ours, for several reasons:

Lightweight: k3s is optimized for minimal resource consumption, making it suitable for environments with limited memory and processing power.

Portability: Due to its lightweight nature, k3s can be deployed across a wide range of platforms, including IoT devices, edge servers, and cloud instances. This flexibility makes it suitable for diverse deployment scenarios.

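Simplified Installation: k3s is packaged as a single binary with an install script, so a working cluster takes a single command, whereas a full-fledged k8s cluster typically requires assembling and configuring its components separately (for example with kubeadm).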

Ease of Management: k3s simplifies cluster management tasks, such as updates and maintenance. Its lightweight design and simplified architecture make it easier to manage and operate compared to k8s.

In summary, k3s offers a lightweight, simplified alternative to a standard Kubernetes (k8s) deployment. It is well suited to environments where resource efficiency, simplicity, and ease of management are important considerations.

Why is having your own k3s cluster interesting?

Having your own k3s cluster is valuable primarily because it provides an excellent platform for learning about Kubernetes and container orchestration. With hands-on experience, you can experiment, explore, and gain a deep understanding of Kubernetes architecture, configuration, and deployment practices.

Moreover, running your own k3s cluster lets you deploy personalized services and set up a customized development environment, so you can build your personal projects with the same tools and practices used professionally.

Agent vs Server

Server Node

Server nodes in k3s act as the control plane for the Kubernetes cluster.

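They manage the cluster state, store configuration data (in k3s, an embedded SQLite database by default, or embedded etcd or an external database for high-availability setups), and handle API requests from clients. Server nodes are responsible for tasks such as scheduling pods, maintaining cluster-wide networking, and managing cluster-wide resources.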

Agent Node

Agent nodes, also known as worker nodes, are responsible for running the workloads and containers in the Kubernetes cluster.

They receive their work from the server nodes: the kubelet on each agent communicates with the Kubernetes API server, runs the pods scheduled onto that node, and manages their container lifecycles through the container runtime (containerd in k3s). Agent nodes do not participate in managing the cluster state.

For our environment we will use a single server node. A k3s server also runs the agent components by default, so this one node both manages the cluster and runs its workloads. If you have more machines available, check the high-availability K3s guide from the K3s team.
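For reference only, since this series sticks to one server: if you add machines later, the K3s quick-start documentation joins an agent by running the same install script with `K3S_URL` and `K3S_TOKEN` set. `<server-ip>` and `<node-token>` below are placeholders for your own values; the token is stored on the server in `/var/lib/rancher/k3s/server/node-token`.

```shell
# On the server: print the join token that agents need (root-only file).
sudo cat /var/lib/rancher/k3s/server/node-token

# On each additional machine: setting K3S_URL makes the script install k3s in agent mode.
# <server-ip> and <node-token> are placeholders; port 6443 is the server's API endpoint.
curl -sfL https://get.k3s.io | K3S_URL=https://<server-ip>:6443 K3S_TOKEN=<node-token> sh -
```

Once the agent has joined, `kubectl get nodes` on the server should list it alongside the server node.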

Deploy your own K3s cluster

Prerequisites

Resources 🪵

These are the resource requirements for k3s:

| Spec | Minimum | Recommended |
|---|---|---|
| CPU | 1 core | 2 cores |
| RAM | 512 MB | 1 GB |

Architecture 🏗️

K3s is available for the following architectures:

- x86_64

- armhf

- arm64/aarch64

- s390x
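Before installing, you may want to check a host against the two tables above. The script below is a hypothetical pre-flight helper written for this post, not something shipped with k3s. It assumes a Linux host (it reads `/proc/meminfo`), treats 1 MB as 1024 kB, and assumes armhf boards report `armv7l` from `uname -m`. `MemTotal` is slightly lower than the installed RAM, so a machine with exactly 512 MB may be reported as below the minimum; treat the output as a hint rather than a verdict.

```shell
#!/bin/sh
# Hypothetical pre-flight check (not part of k3s) against the resource and architecture tables.

# Succeeds if the `uname -m` value matches an architecture k3s publishes builds for.
# Assumption: armhf hosts report armv7l.
is_supported_arch() {
  case "$1" in
    x86_64 | armv7l | aarch64 | arm64 | s390x) return 0 ;;
    *) return 1 ;;
  esac
}

# Succeeds if CORES ($1) and MemTotal in kB ($2) meet the minimum: 1 core, 512 MB.
meets_minimum() {
  [ "$1" -ge 1 ] && [ "$2" -ge $((512 * 1024)) ]
}

# Succeeds if CORES ($1) and MemTotal in kB ($2) meet the recommendation: 2 cores, 1 GB.
meets_recommended() {
  [ "$1" -ge 2 ] && [ "$2" -ge $((1024 * 1024)) ]
}

arch=$(uname -m)
cores=$(nproc)
mem_kb=$(awk '/^MemTotal:/ { print $2 }' /proc/meminfo)

echo "arch=$arch cores=$cores mem_kb=$mem_kb"

if is_supported_arch "$arch"; then
  echo "architecture: supported"
else
  echo "architecture: not in the k3s list"
fi

if meets_recommended "$cores" "$mem_kb"; then
  echo "resources: meet the recommended spec"
elif meets_minimum "$cores" "$mem_kb"; then
  echo "resources: meet the minimum spec only"
else
  echo "resources: below the minimum spec"
fi
```

On the 4 vCPU / 24 GB instance used later in this post, the resource check should report the recommended spec.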

Networking 🛜

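If you want to connect multiple nodes, follow the K3s team’s networking recommendations; at a minimum, agent nodes must be able to reach the server on TCP port 6443 (the Kubernetes API server).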

Deployment

We are going to deploy the most basic k3s setup: a single server node.

This node will run on an Oracle Cloud Free Tier instance (an Always Free Ampere A1 shape, so the architecture is arm64) with:

| Spec | Value |
|---|---|
| CPU | 4 vCPU |
| RAM | 24 GB |
| Storage | 200 GB |

This is far more than the recommended spec above, leaving plenty of headroom for Rancher and the services we will deploy later in the series.

To get your own free instance, check out this guide to setting up an instance in Oracle Cloud.

After deploying k3s, we will install Helm and then use it to install Rancher, a powerful management tool for Kubernetes clusters.

K3s

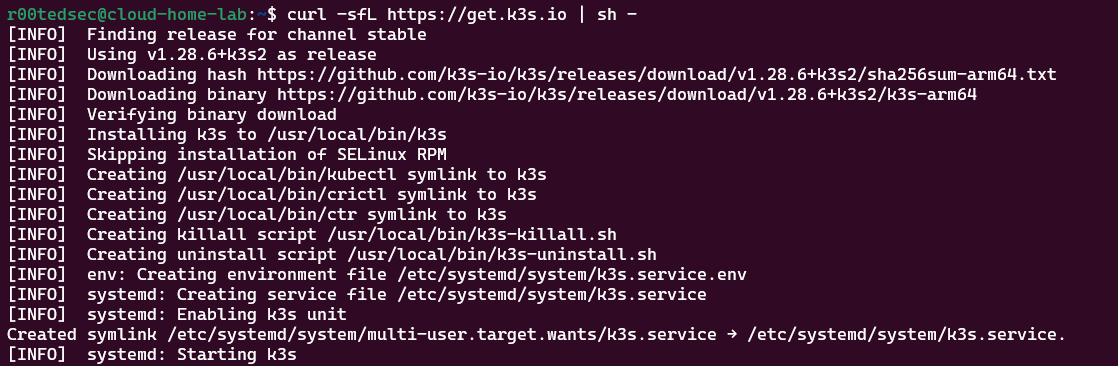

We are going to deploy the latest stable version of k3s using the quick-start install script provided by the K3s team:
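This is the quick-start command from the K3s documentation. With no extra options it installs the latest stable release as a server node and registers it as a service named `k3s`; the status check below assumes a systemd-based distribution.

```shell
# Download the official K3s install script and run it (latest stable release, server mode).
curl -sfL https://get.k3s.io | sh -

# Optional: confirm the service is running (systemd-based distributions only).
sudo systemctl status k3s
```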

To administer the cluster as your regular (non-root) user, change the ownership of the k3s.yaml kubeconfig file using chown:
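A minimal sketch, assuming you want your current login user (`$USER`) to own the kubeconfig; by default k3s writes it so that only root can read it.

```shell
# Give your current user ownership of the kubeconfig that k3s wrote.
sudo chown "$USER" /etc/rancher/k3s/k3s.yaml
```

If you would rather change the file's permissions than its owner, k3s also documents a `--write-kubeconfig-mode` server option for that purpose.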

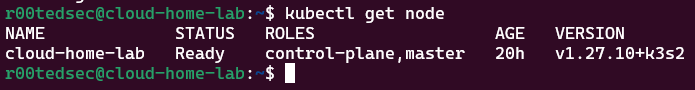

Verify the cluster is running properly:
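Two standard kubectl checks. The exact pod list depends on the k3s version, so treat the component names in the comments as typical rather than guaranteed.

```shell
# The single server node should be listed with STATUS "Ready".
kubectl get nodes

# Packaged system pods (e.g. CoreDNS, Traefik, metrics-server) should reach Running or Completed.
kubectl get pods --all-namespaces
```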

If this is your first time using kubectl, check out this guide.

After this installation:

- The K3s service will be configured to automatically restart after node reboots or if the process crashes or is killed

- Additional utilities will be installed, including kubectl, crictl, ctr, k3s-killall.sh, and k3s-uninstall.sh

- A kubeconfig file will be written to /etc/rancher/k3s/k3s.yaml and the kubectl installed by K3s will automatically use it

You now have a fully functional k3s cluster to start with. Congrats! 🥳🥳

Helm

Helm is like APT for Kubernetes. It helps you find, install, and manage applications on your Kubernetes cluster using pre-made packages called charts. With Helm, you can easily customize and deploy apps, manage dependencies between them, and keep track of different versions. It’s a convenient tool that simplifies the process of working with Kubernetes and saves you time when deploying applications.

Deployment

Download the Helm installation script from the official Helm GitHub repository using curl:
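This is the download command from Helm's installation docs for the Helm 3 installer; newer Helm major versions may publish a differently named script, so check the docs if the URL returns an error.

```shell
# Fetch Helm's official installer script and save it as get_helm.sh.
curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3
```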

Ensure that the downloaded script has the necessary permissions to be executed:
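Assuming the script was saved as `get_helm.sh` (the file name Helm's docs use):

```shell
# Make the installer executable (read/write/execute for its owner only).
chmod 700 get_helm.sh
```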

Execute the Helm installation script to install Helm on your system:
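Again assuming the file name `get_helm.sh`. By default the installer places the `helm` binary in `/usr/local/bin` and uses sudo when it needs elevated permissions.

```shell
# Run the installer; it detects your OS and architecture and installs the helm binary.
./get_helm.sh

# Confirm that helm is on your PATH.
helm version
```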

Helm reads the standard kubeconfig locations rather than the file k3s writes, so set the KUBECONFIG environment variable to point to the kubeconfig file of your k3s cluster. This lets Helm interact with your cluster:
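The export below only lasts for the current shell session; to make it permanent you could append the same line to your shell profile (for example `~/.bashrc`, assuming bash).

```shell
# Point Helm (and any other kubeconfig-aware tool) at the kubeconfig k3s wrote.
export KUBECONFIG=/etc/rancher/k3s/k3s.yaml
```

A quick `helm list --all-namespaces` should then run without a connection error.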

After completing these steps, you are ready to use Helm to deploy and manage applications on your Kubernetes cluster.

Check out this video to learn more about Helm!

Rancher

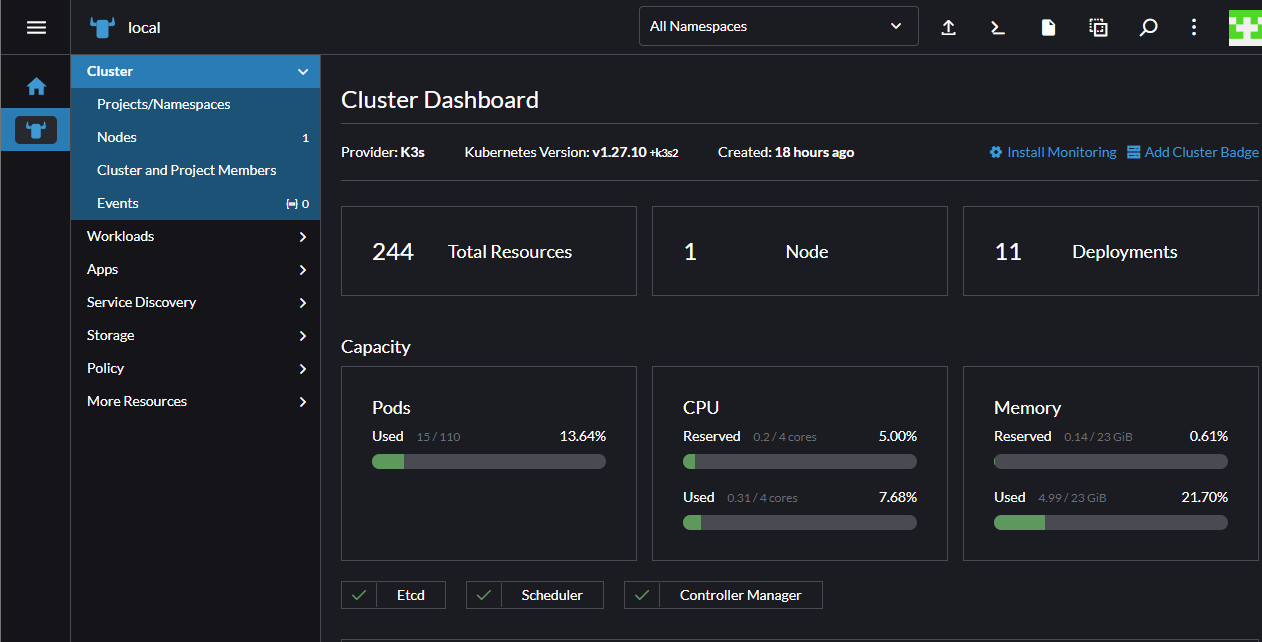

Rancher is an open-source platform that helps you manage and organize containerized apps across different environments. It’s like a one-stop shop for working with multiple Kubernetes clusters, making tasks like setting up infrastructure, deploying apps, and keeping an eye on performance easier. Plus, it has built-in security features, such as centralized authentication and role-based access control 🦺

Deployment

Add the Rancher Helm repository to your local Helm installation.
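The Rancher docs offer latest, stable, and alpha chart repositories; this sketch assumes the stable one, which Rancher recommends for production, registered under the local name `rancher-stable`.

```shell
# Register the Rancher chart repository (stable channel) and refresh the local chart index.
helm repo add rancher-stable https://releases.rancher.com/server-charts/stable
helm repo update
```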

Create a Kubernetes namespace called cattle-system where Rancher will be deployed.
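The Rancher docs expect the chart to be installed into this exact namespace:

```shell
# Create the namespace the Rancher chart is installed into.
kubectl create namespace cattle-system
```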

Install Rancher with Helm, replacing <your-selected-hostname> with the hostname you want to use for Rancher and <password> with your desired bootstrap password.
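A sketch based on the install command in the Rancher docs, assuming the chart repository was added as `rancher-stable`. `RANCHER_HOSTNAME` and `BOOTSTRAP_PASSWORD` are hypothetical helper variables introduced here so the `<...>` placeholders are not parsed as shell redirections. With no TLS options set, the chart uses its default TLS source (Rancher-generated, self-signed certificates), which according to the Rancher docs requires cert-manager to be installed in the cluster first.

```shell
# Hypothetical helper variables: replace both placeholder values before running.
RANCHER_HOSTNAME="<your-selected-hostname>"
BOOTSTRAP_PASSWORD="<password>"

# Install the Rancher chart into cattle-system with the chosen hostname and bootstrap password.
helm install rancher rancher-stable/rancher \
  --namespace cattle-system \
  --set hostname="$RANCHER_HOSTNAME" \
  --set bootstrapPassword="$BOOTSTRAP_PASSWORD"
```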

For TLS configuration options, check the Rancher reference. In my case I’m going to use a self-signed certificate, because I’m using Cloudflare in front of Rancher to present a valid certificate to users.
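Before opening the URL, you can wait for the Rancher deployment to finish rolling out; the Rancher docs suggest this check after installing the chart.

```shell
# Block until the Rancher deployment reports all replicas available (Ctrl+C stops waiting).
kubectl -n cattle-system rollout status deploy/rancher
```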

Once the deployment is ready, you should be able to access Rancher at:

https://<your-selected-hostname>

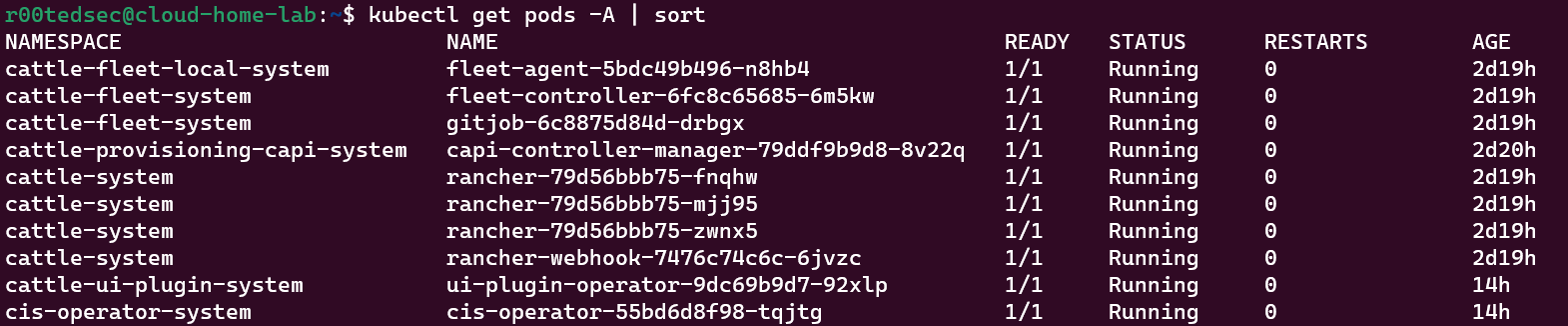

That’s it! Rancher should now be successfully deployed in your Kubernetes cluster. You can access it using the provided hostname and bootstrap password.

Conclusions

That wraps up the first post in the series! As you’ve seen, setting up your own k3s cluster doesn’t have to be daunting, and having Rancher deployed will streamline cluster management and service deployment.

In the upcoming post, I’ll delve into hardening your existing cluster and leveraging Kubernetes’ built-in security features.

Stay connected on my social media channels, and feel free to suggest future post topics!